Problem analysis

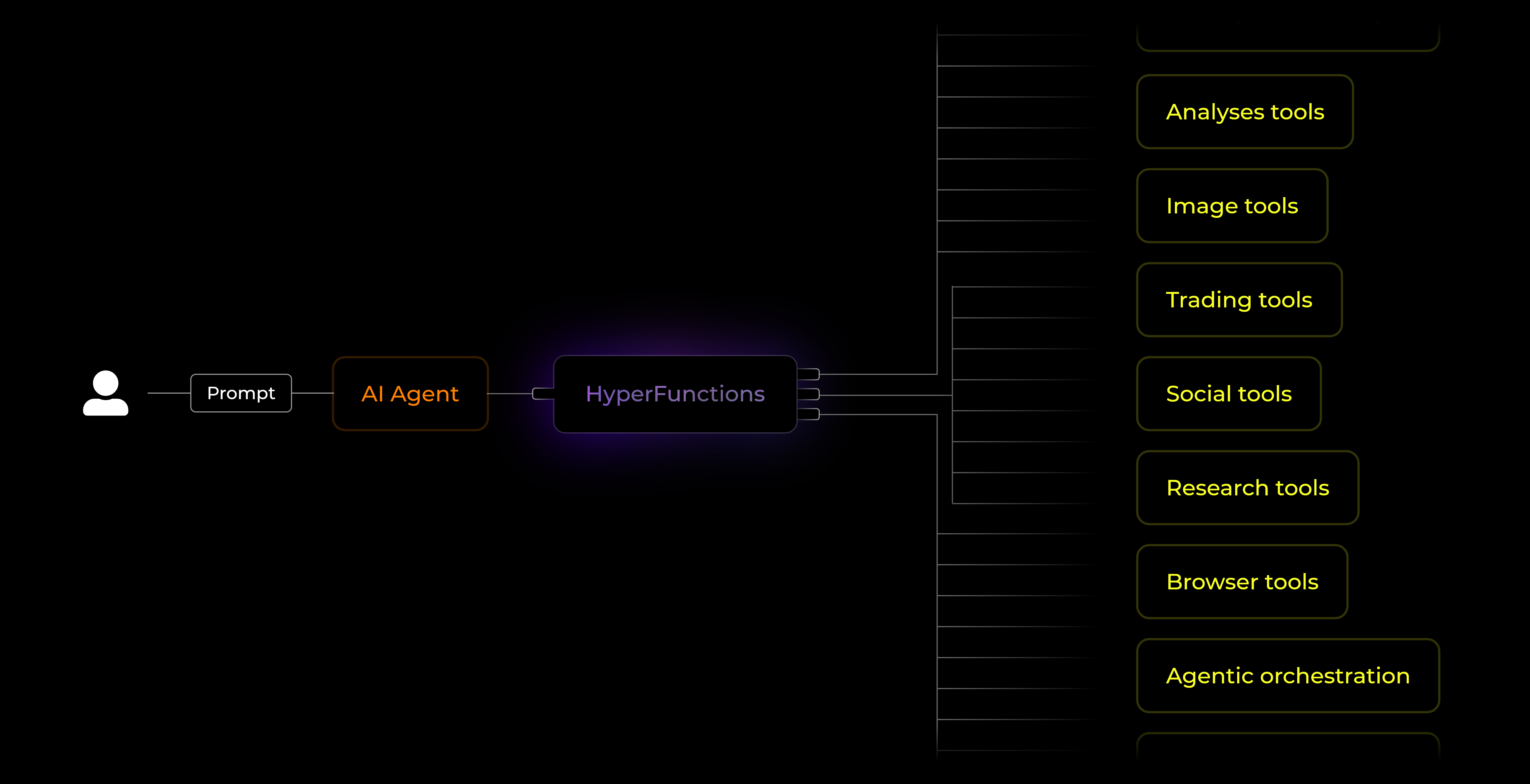

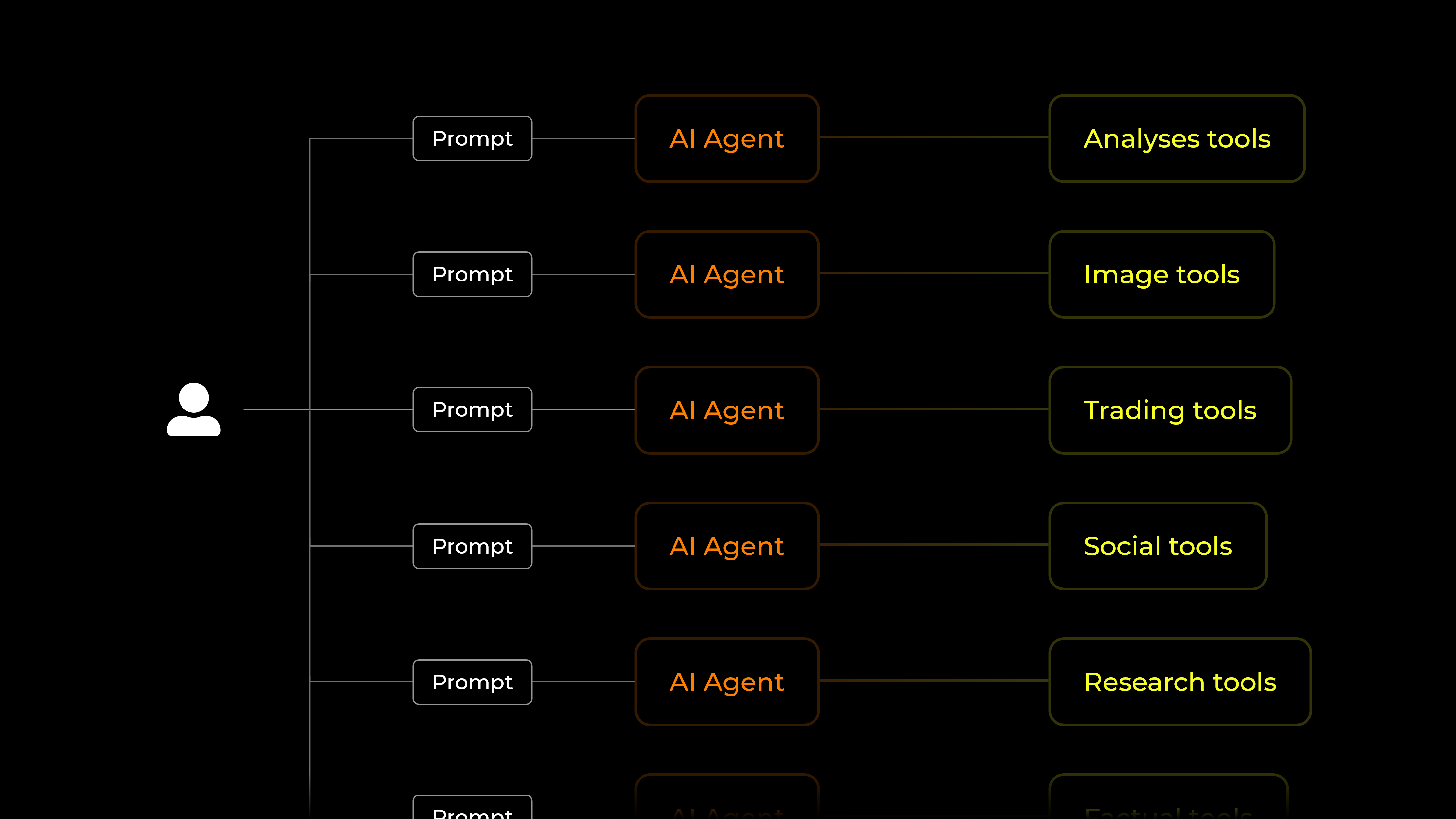

Function calling allows LLMs to interact with external services, greatly increasing the capabilities and applicability of AI. Applications built this way are known as AI agents. Most current agentic applications split their functionality across multiple agents, which fails to address the fundamental problem of scattered functionality. This fragmentation persists because of the technical constraints of function calling: current solutions cap at around 20 functions per agent. Using multiple agents to handle different tasks therefore perpetuates the very problem it aims to solve. Users must still navigate multiple interfaces and systems, merely replacing traditional app fragmentation with AI agent fragmentation.

Solution

HyperFunctions is the world’s first hybrid retrieval pipeline, enabling any single AI agent to seamlessly access and integrate thousands of functions.

Hyper-scalable

Integrate thousands of functions in any single AI agent.
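To picture how a retrieval step can make thousands of functions workable for a single agent, consider ranking every registered function against the user's request and passing only the top few to the model. The sketch below is illustrative only and is not HyperFunctions' actual pipeline or API. `FunctionSpec`, `select_functions`, and the `alpha` weight are hypothetical names, and the character-trigram vectors are a simple stand-in for learned embeddings.

```python
import math
import re
from collections import Counter
from dataclasses import dataclass


@dataclass
class FunctionSpec:
    """Hypothetical registry entry: a callable's name and description."""
    name: str
    description: str


def tokens(text: str) -> set[str]:
    # Lowercased alphanumeric words; underscores act as separators.
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def keyword_score(query: str, doc: str) -> float:
    # Lexical signal: fraction of query words that appear in the document.
    q, d = tokens(query), tokens(doc)
    return len(q & d) / len(q) if q else 0.0


def trigram_vector(text: str) -> Counter:
    # Stand-in for a dense embedding: counts of character trigrams.
    s = " ".join(re.findall(r"[a-z0-9]+", text.lower()))
    s = f"  {s} "
    return Counter(s[i:i + 3] for i in range(len(s) - 2))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[k] * b[k] for k in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def select_functions(
    query: str, registry: list[FunctionSpec], k: int = 5, alpha: float = 0.5
) -> list[FunctionSpec]:
    """Return the k best-matching functions using a blended (hybrid) score."""
    query_vec = trigram_vector(query)
    scored = []
    for spec in registry:
        text = f"{spec.name} {spec.description}"
        score = alpha * keyword_score(query, text) + (1 - alpha) * cosine(
            query_vec, trigram_vector(text)
        )
        scored.append((score, spec))
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [spec for _, spec in scored[:k]]
```

Only the short list returned by `select_functions` would be sent to the LLM as callable tools, so the prompt stays within the per-request function limit however large the registry grows.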

Simple integration

Drop-in replacement for standard function calling.

Framework agnostic

HyperFunctions is framework agnostic. It’s compatible with OpenAI Swarm, ARC RIG, LangChain/LangGraph, and all others.

AI provider agnostic

HyperFunctions is AI provider agnostic. Leverage any state-of-the-art LLM of your choice.

Language agnostic

HyperFunctions is language agnostic. Integrate it in TypeScript, Python, Rust, or any other language.